Neural networks require large amounts of training data to learn representations that generalize to novel data. While visual data is readily available, annotating it will remain costly, which motivates self-supervised learning. The challenge is then to enable networks to learn as effectively as possible from self-supervision. We have been exploring self-supervision in areas ranging from deep metric and representation learning to visual synthesis, as well as in applications such as behavior analysis in neuroscience.

Selected Publications

2022

Milbich, Timo; Roth, Karsten; Brattoli, Biagio; Ommer, Björn

Sharing Matters for Generalization in Deep Metric Learning Journal Article

In: IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2022.

@article{6389,

title = {Sharing Matters for Generalization in Deep Metric Learning},

author = {Timo Milbich and Karsten Roth and Biagio Brattoli and Björn Ommer},

url = {https://arxiv.org/abs/2004.05582},

doi = {10.1109/TPAMI.2020.3009620},

year = {2022},

date = {2022-01-01},

urldate = {2022-01-01},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2021

Sanakoyeu, Artsiom; Ma, Pingchuan; Tschernezki, Vadim; Ommer, Björn

Improving Deep Metric Learning by Divide and Conquer Journal Article

In: IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

@article{nokey,

title = {Improving Deep Metric Learning by Divide and Conquer},

author = {Artsiom Sanakoyeu and Pingchuan Ma and Vadim Tschernezki and Björn Ommer},

url = {https://github.com/CompVis/metric-learning-divide-and-conquer-improved

https://ieeexplore.ieee.org/document/9540303},

doi = {10.1109/TPAMI.2021.3113270},

year = {2021},

date = {2021-09-16},

urldate = {2021-09-16},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Roth, Karsten; Milbich, Timo; Ommer, Björn; Cohen, Joseph Paul; Ghassemi, Marzyeh

S2SD: Simultaneous Similarity-based Self-Distillation for Deep Metric Learning Conference

Proceedings of International Conference on Machine Learning (ICML), 2021.

@conference{7051,

title = {S2SD: Simultaneous Similarity-based Self-Distillation for Deep Metric Learning},

author = {Karsten Roth and Timo Milbich and Björn Ommer and Joseph Paul Cohen and Marzyeh Ghassemi},

url = {https://github.com/MLforHealth/S2SD

https://arxiv.org/abs/2009.08348},

year = {2021},

date = {2021-01-01},

urldate = {2021-01-01},

booktitle = {Proceedings of International Conference on Machine Learning (ICML)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

2020

Milbich, Timo; Ghori, Omair; Ommer, Björn

Unsupervised Representation Learning by Discovering Reliable Image Relations Journal Article

In: Pattern Recognition, vol. 102, 2020.

@article{6339,

title = {Unsupervised Representation Learning by Discovering Reliable Image Relations},

author = {Timo Milbich and Omair Ghori and Björn Ommer},

url = {http://arxiv.org/abs/1911.07808},

year = {2020},

date = {2020-01-01},

urldate = {2020-01-01},

journal = {Pattern Recognition},

volume = {102},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Milbich, Timo; Roth, Karsten; Bharadhwaj, Homanga; Sinha, Samarth; Bengio, Yoshua; Ommer, Björn; Cohen, Joseph Paul

DiVA: Diverse Visual Feature Aggregation for Deep Metric Learning Conference

IEEE European Conference on Computer Vision (ECCV), 2020.

@conference{6934,

title = {DiVA: Diverse Visual Feature Aggregation for Deep Metric Learning},

author = {Timo Milbich and Karsten Roth and Homanga Bharadhwaj and Samarth Sinha and Yoshua Bengio and Björn Ommer and Joseph Paul Cohen},

url = {https://arxiv.org/abs/2004.13458},

year = {2020},

date = {2020-01-01},

urldate = {2020-01-01},

booktitle = {IEEE European Conference on Computer Vision (ECCV)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

Dencker, Tobias; Klinkisch, Pablo; Maul, Stefan M; Ommer, Björn

Deep learning of cuneiform sign detection with weak supervision using transliteration alignment Journal Article

In: PLoS ONE, vol. 15, 2020.

@article{7029,

title = {Deep learning of cuneiform sign detection with weak supervision using transliteration alignment},

author = {Tobias Dencker and Pablo Klinkisch and Stefan M Maul and Björn Ommer},

url = {https://hci.iwr.uni-heidelberg.de/compvis/projects/cuneiform

https://ommer-lab.com/wp-content/uploads/2021/10/Deep-Learning-of-Cuneiform-Sign-Detection-with-Weak-Supervivion-Using-Transliteration-Alignment.pdf},

doi = {10.1371/journal.pone.0243039},

year = {2020},

date = {2020-01-01},

urldate = {2020-01-01},

journal = {PLoS ONE},

volume = {15},

pages = {1-21},

abstract = {The cuneiform script provides a glimpse into our ancient history. However, reading age-old clay tablets is time-consuming and requires years of training. To simplify this process, we propose a deep-learning based sign detector that locates and classifies cuneiform signs in images of clay tablets. Deep learning requires large amounts of training data in the form of bounding boxes around cuneiform signs, which are not readily available and costly to obtain in the case of cuneiform script. To tackle this problem, we make use of existing transliterations, a sign-by-sign representation of the tablet content in Latin script. Since these do not provide sign localization, we propose a weakly supervised approach: We align tablet images with their corresponding transliterations to localize the transliterated signs in the tablet image, before using these localized signs in place of annotations to re-train the sign detector. A better sign detector in turn boosts the quality of the alignments. We combine these steps in an iterative process that enables training a cuneiform sign detector from transliterations only. While our method works weakly supervised, a small number of annotations further boost the performance of the cuneiform sign detector which we evaluate on a large collection of clay tablets from the Neo-Assyrian period. To enable experts to directly apply the sign detector in their study of cuneiform texts, we additionally provide a web application for the analysis of clay tablets with a trained cuneiform sign detector.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}
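The alignment step described in the abstract above can be illustrated with a minimal sketch. It assumes, as a simplification that is not taken from the paper, that the detections on one line of a tablet have already been ordered and labeled, so that matching them to the transliteration reduces to a standard edit-distance (Needleman-Wunsch style) alignment of two label sequences. Detections whose labels match their aligned transliterated sign would then serve as pseudo-annotations for retraining the detector. The function name `align_signs` and the unit gap and mismatch costs are hypothetical.

```python
from typing import List, Sequence, Tuple


def align_signs(detected: Sequence[str], transliteration: Sequence[str],
                gap: int = 1, mismatch: int = 1) -> List[Tuple[int, int]]:
    """Globally align two label sequences; return (detection, sign) index pairs that match."""
    n, m = len(detected), len(transliteration)
    # cost[i][j]: minimal cost of aligning detected[:i] with transliteration[:j].
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i * gap
    for j in range(1, m + 1):
        cost[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = 0 if detected[i - 1] == transliteration[j - 1] else mismatch
            cost[i][j] = min(cost[i - 1][j - 1] + sub,  # match or substitution
                             cost[i - 1][j] + gap,      # spurious detection
                             cost[i][j - 1] + gap)      # missed sign
    # Backtrack from the bottom-right corner, keeping only exact label matches.
    i, j, matches = n, m, []
    while i > 0 and j > 0:
        sub = 0 if detected[i - 1] == transliteration[j - 1] else mismatch
        if cost[i][j] == cost[i - 1][j - 1] + sub:
            if sub == 0:
                matches.append((i - 1, j - 1))
            i, j = i - 1, j - 1
        elif cost[i][j] == cost[i - 1][j] + gap:
            i -= 1
        else:
            j -= 1
    return matches[::-1]


# Toy usage: the detector produced one spurious sign "x" between "a" and "b".
print(align_signs(["a", "x", "b", "c"], ["a", "b", "c"]))
```

In an iterative scheme like the one described, the matched detections would be fed back as training boxes, and the improved detector would in turn produce better sequences to align.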

2019

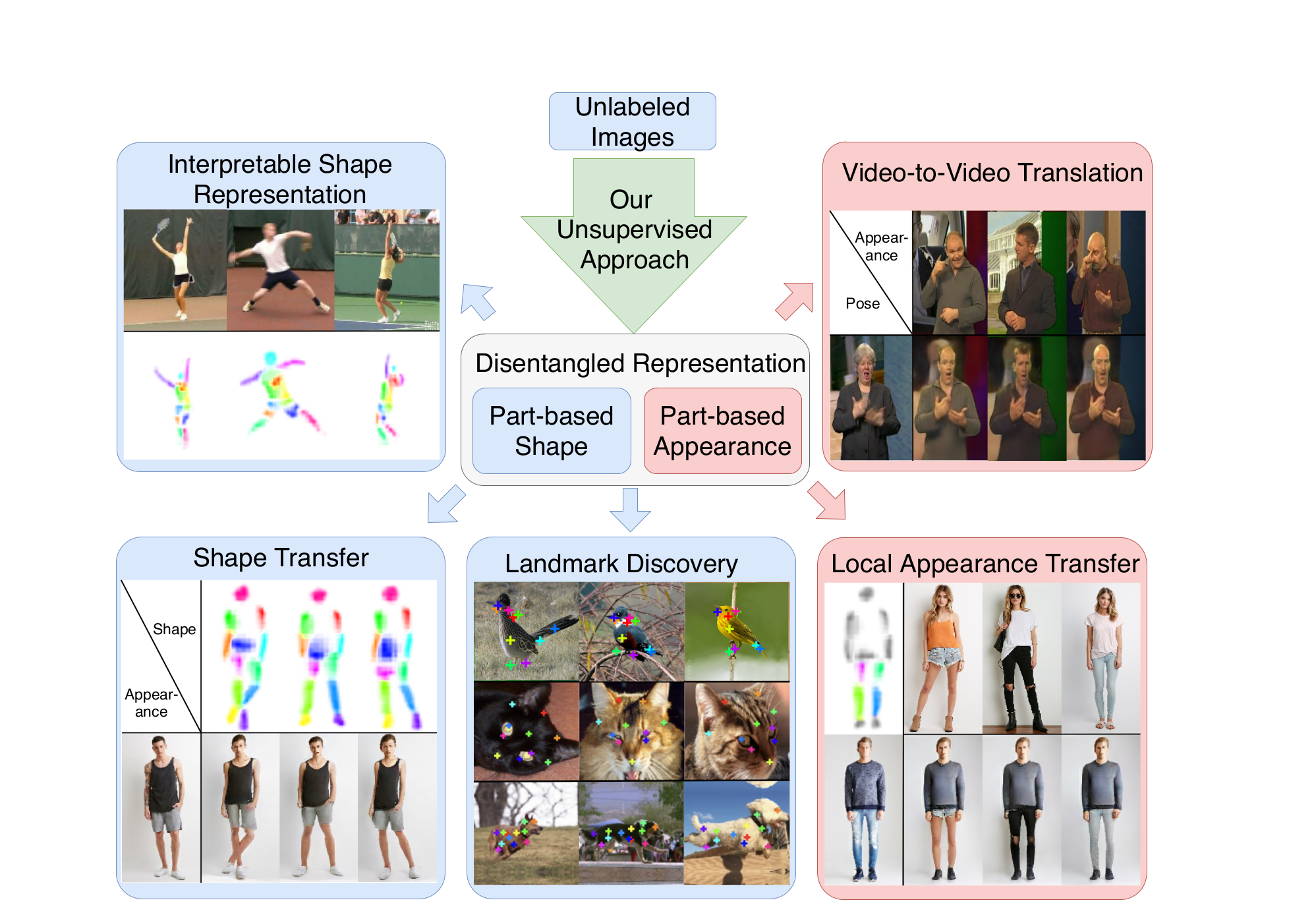

Esser, Patrick; Haux, Johannes; Ommer, Björn

Unsupervised Robust Disentangling of Latent Characteristics for Image Synthesis Conference

Proceedings of the Intl. Conf. on Computer Vision (ICCV), 2019.

@conference{6323,

title = {Unsupervised Robust Disentangling of Latent Characteristics for Image Synthesis},

author = {Patrick Esser and Johannes Haux and Björn Ommer},

url = {https://compvis.github.io/robust-disentangling/

https://arxiv.org/abs/1910.10223},

year = {2019},

date = {2019-01-01},

urldate = {2019-01-01},

booktitle = {Proceedings of the Intl. Conf. on Computer Vision (ICCV)},

abstract = {Deep generative models come with the promise to learn an explainable representation for visual objects that allows image sampling, synthesis, and selective modification. The main challenge is to learn to properly model the independent latent characteristics of an object, especially its appearance and pose. We present a novel approach that learns disentangled representations of these characteristics and explains them individually. Training requires only pairs of images depicting the same object appearance, but no pose annotations. We propose an additional classifier that estimates the minimal amount of regularization required to enforce disentanglement. Thus both representations together can completely explain an image while being independent of each other. Previous methods based on adversarial approaches fail to enforce this independence, while methods based on variational approaches lead to uninformative representations. In experiments on diverse object categories, the approach successfully recombines pose and appearance to reconstruct and retarget novel synthesized images. We achieve significant improvements over state-of-the-art methods which utilize the same level of supervision, and reach performances comparable to those of pose-supervised approaches. However, we can handle the vast body of articulated object classes for which no pose models/annotations are available.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

Lorenz, Dominik; Bereska, Leonard; Milbich, Timo; Ommer, Björn

Unsupervised Part-Based Disentangling of Object Shape and Appearance Conference

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Oral + Best paper finalist: top 45 / 5160 submissions), 2019.

@conference{6301,

title = {Unsupervised Part-Based Disentangling of Object Shape and Appearance},

author = {Dominik Lorenz and Leonard Bereska and Timo Milbich and Björn Ommer},

url = {https://compvis.github.io/unsupervised-disentangling/

https://arxiv.org/abs/1903.06946},

year = {2019},

date = {2019-01-01},

urldate = {2019-01-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Oral + Best paper finalist: top 45 / 5160 submissions)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

Sanakoyeu, A.; Tschernezki, V.; Büchler, Uta; Ommer, Björn

Divide and Conquer the Embedding Space for Metric Learning Conference

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

@conference{6299,

title = {Divide and Conquer the Embedding Space for Metric Learning},

author = {A. Sanakoyeu and V. Tschernezki and Uta Büchler and Björn Ommer},

url = {https://github.com/CompVis/metric-learning-divide-and-conquer

https://arxiv.org/abs/1906.05990},

year = {2019},

date = {2019-01-01},

urldate = {2019-01-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

Brattoli, Biagio; Roth, Karsten; Ommer, Björn

MIC: Mining Interclass Characteristics for Improved Metric Learning Conference

Proceedings of the Intl. Conf. on Computer Vision (ICCV), 2019.

@conference{6321,

title = {MIC: Mining Interclass Characteristics for Improved Metric Learning},

author = {Biagio Brattoli and Karsten Roth and Björn Ommer},

url = {https://github.com/Confusezius/ICCV2019_MIC

https://arxiv.org/abs/1909.11574},

year = {2019},

date = {2019-01-01},

urldate = {2019-01-01},

booktitle = {Proceedings of the Intl. Conf. on Computer Vision (ICCV)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

2018

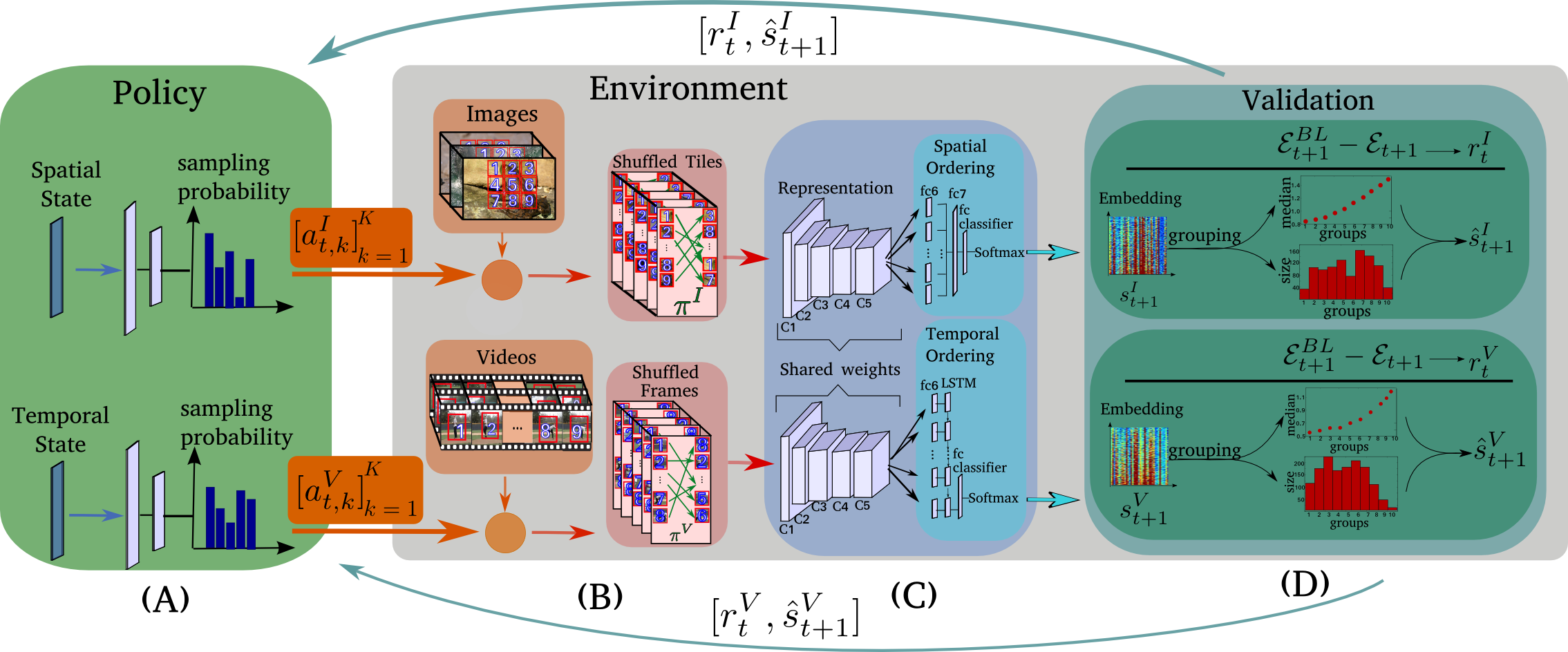

Büchler, Uta; Brattoli, Biagio; Ommer, Björn

Improving Spatiotemporal Self-Supervision by Deep Reinforcement Learning Conference

Proceedings of the European Conference on Computer Vision (ECCV), (UB and BB contributed equally), Munich, Germany, 2018.

@conference{buechler:ECCV:2018,

title = {Improving Spatiotemporal Self-Supervision by Deep Reinforcement Learning},

author = {Uta Büchler and Biagio Brattoli and Björn Ommer},

url = {https://arxiv.org/abs/1807.11293

https://hci.iwr.uni-heidelberg.de/sites/default/files/publications/files/1855931744/buechler_eccv18_poster.pdf},

year = {2018},

date = {2018-01-01},

urldate = {2018-01-01},

booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},

note = {UB and BB contributed equally},

address = {Munich, Germany},

abstract = {Self-supervised learning of convolutional neural networks can harness large amounts of cheap unlabeled data to train powerful feature representations. As surrogate task, we jointly address ordering of visual data in the spatial and temporal domain. The permutations of training samples, which are at the core of self-supervision by ordering, have so far been sampled randomly from a fixed preselected set. Based on deep reinforcement learning we propose a sampling policy that adapts to the state of the network, which is being trained. Therefore, new permutations are sampled according to their expected utility for updating the convolutional feature representation. Experimental evaluation on unsupervised and transfer learning tasks demonstrates competitive performance on standard benchmarks for image and video classification and nearest neighbor retrieval.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}
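The idea of sampling permutations according to their expected utility, rather than uniformly from a fixed preselected set, can be sketched as a softmax policy trained with a REINFORCE-style update. This is a deliberately simplified, state-free bandit version: the paper's policy is a deep reinforcement learning agent that adapts to the state of the network being trained, which is not modeled here. The class `PermutationPolicy`, the running-mean baseline, and the toy reward are all hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def make_ordering_sample(frames: Sequence, permutations: List[Tuple[int, ...]], perm_idx: int):
    """Shuffle frames with the chosen permutation; the label to predict is its index."""
    perm = permutations[perm_idx]
    return [frames[i] for i in perm], perm_idx


class PermutationPolicy:
    """Hypothetical state-free softmax policy over a fixed set of permutations."""

    def __init__(self, num_perms: int, lr: float = 0.1, seed: int = 0):
        self.prefs = [0.0] * num_perms  # one preference score per permutation
        self.lr = lr
        self.baseline = 0.0  # running mean of rewards, reduces gradient variance
        self.rng = random.Random(seed)

    def probs(self) -> List[float]:
        # Numerically stable softmax over the preference scores.
        top = max(self.prefs)
        exps = [math.exp(p - top) for p in self.prefs]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self) -> int:
        return self.rng.choices(range(len(self.prefs)), weights=self.probs(), k=1)[0]

    def update(self, action: int, reward: float) -> None:
        # REINFORCE: the gradient of log softmax w.r.t. prefs is one_hot(action) - probs.
        advantage = reward - self.baseline
        p = self.probs()
        for a in range(len(self.prefs)):
            indicator = 1.0 if a == action else 0.0
            self.prefs[a] += self.lr * advantage * (indicator - p[a])
        self.baseline += 0.1 * (reward - self.baseline)


# Toy usage: pretend permutation 2 is the most useful one for training.
perms = [(0, 1, 2, 3), (1, 0, 3, 2), (3, 2, 1, 0)]
frames = ["f0", "f1", "f2", "f3"]
policy = PermutationPolicy(len(perms))
for _ in range(300):
    action = policy.sample()
    shuffled, label = make_ordering_sample(frames, perms, action)
    # In a real setup the reward would measure the benefit of training on (shuffled, label).
    reward = 1.0 if action == 2 else 0.0
    policy.update(action, reward)
print([round(p, 2) for p in policy.probs()])
```

The baseline only shifts the reward, so the update still follows the policy gradient in expectation while making individual steps less noisy.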

Sayed, N.; Brattoli, Biagio; Ommer, Björn

Cross and Learn: Cross-Modal Self-Supervision Conference

German Conference on Pattern Recognition (GCPR) (Oral), Stuttgart, Germany, 2018.

@conference{sayed:GCPR:2018,

title = {Cross and Learn: Cross-Modal Self-Supervision},

author = {N. Sayed and Biagio Brattoli and Björn Ommer},

url = {https://arxiv.org/abs/1811.03879v1

https://bbrattoli.github.io/publications/images/sayed_crossandlearn.pdf},

year = {2018},

date = {2018-01-01},

urldate = {2018-01-01},

booktitle = {German Conference on Pattern Recognition (GCPR) (Oral)},

address = {Stuttgart, Germany},

abstract = {In this paper we present a self-supervised method to learn feature representations for different modalities. Based on the observation that cross-modal information has a high semantic meaning we propose a method to effectively exploit this signal. For our method we utilize video data since it is available on a large scale and provides easily accessible modalities given by RGB and optical flow. We demonstrate state-of-the-art performance on highly contested action recognition datasets in the context of self-supervised learning. We also show the transferability of our feature representations and conduct extensive ablation studies to validate our core contributions.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}
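The cross-modal signal described above can be illustrated with a toy loss that pulls the RGB and optical-flow features of the same clip together while pushing features of different clips apart within each modality. This is a sketch under stated assumptions (cosine similarity, a plain average over pairs, a hypothetical weight `div_weight`) and not the exact objective of the paper; in practice the features would come from two trainable CNNs rather than fixed lists.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-12)


def cross_modal_loss(rgb: List[Sequence[float]], flow: List[Sequence[float]],
                     div_weight: float = 0.5) -> float:
    """Hypothetical loss: agreement across modalities plus diversity within each modality."""
    n = len(rgb)
    assert n == len(flow) and n >= 2, "need paired features for at least two clips"
    # Cross-modal term: 1 - cos for each matching (rgb_i, flow_i) pair from the same clip.
    cross = sum(1.0 - cosine(r, f) for r, f in zip(rgb, flow)) / n
    # Diversity term: mean similarity between different clips in the same modality.
    div, pairs = 0.0, 0
    for feats in (rgb, flow):
        for i in range(n):
            for j in range(i + 1, n):
                div += cosine(feats[i], feats[j])
                pairs += 1
    return cross + div_weight * div / pairs


# Toy usage: matched modalities give a lower loss than swapped ones.
good = cross_modal_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
bad = cross_modal_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(round(good, 3), round(bad, 3))
```

Without the diversity term, a trivial solution that maps every clip to the same feature would minimize the cross-modal term, which is why some term keeping different samples apart is needed.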

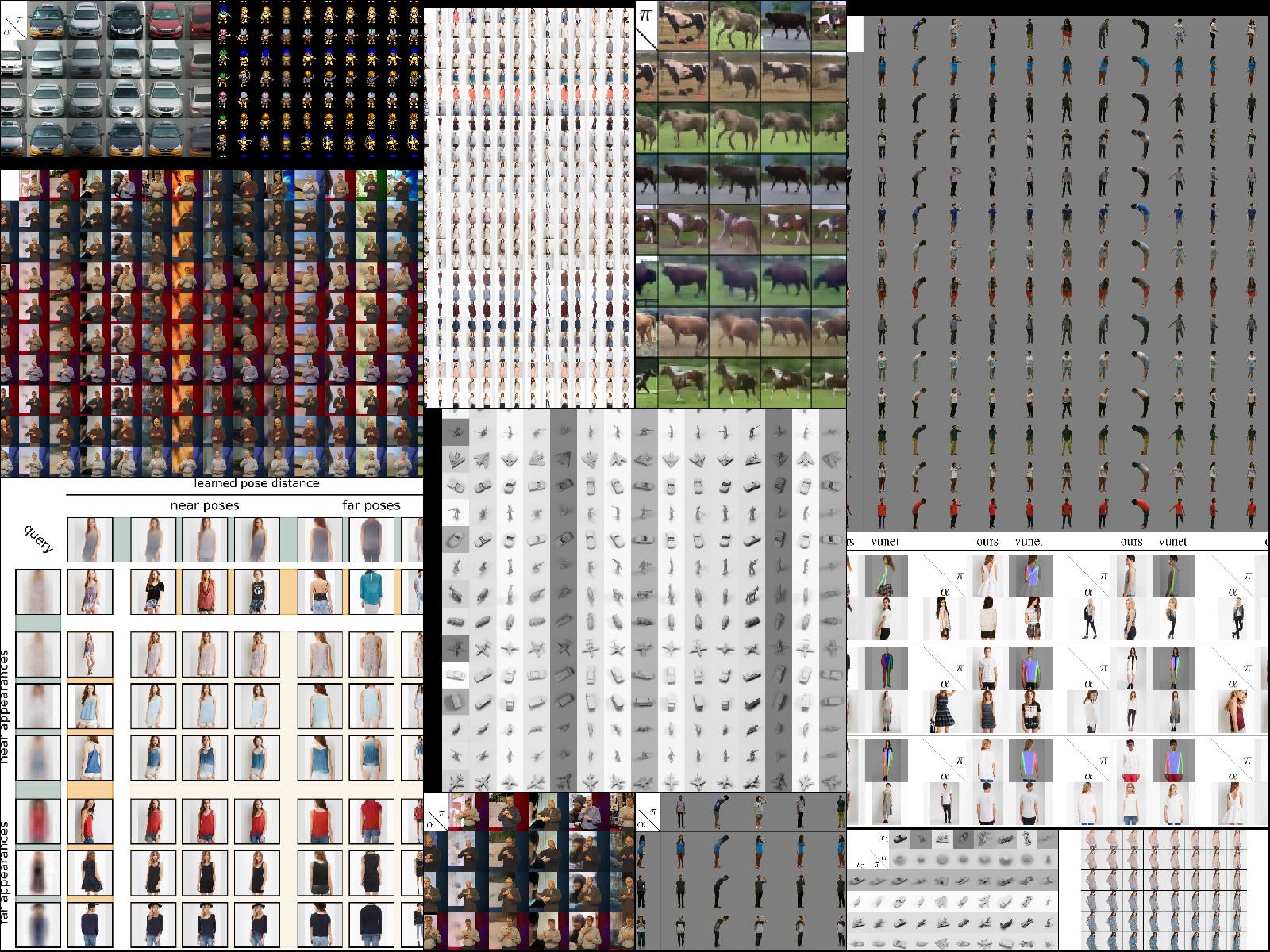

Sanakoyeu, A.; Bautista, Miguel; Ommer, Björn

Deep Unsupervised Learning of Visual Similarities Journal Article

In: Pattern Recognition, vol. 78, 2018.

@article{6229,

title = {Deep Unsupervised Learning of Visual Similarities},

author = {A. Sanakoyeu and Miguel Bautista and Björn Ommer},

url = {https://arxiv.org/abs/1802.08562},

doi = {10.1016/j.patcog.2018.01.036},

year = {2018},

date = {2018-01-01},

urldate = {2018-01-01},

journal = {Pattern Recognition},

volume = {78},

pages = {331},

abstract = {Exemplar learning of visual similarities in an unsupervised manner is a problem of paramount importance to Computer Vision. In this context, however, the recent breakthrough in deep learning could not yet unfold its full potential. With only a single positive sample, a great imbalance between one positive and many negatives, and unreliable relationships between most samples, training of Convolutional Neural networks is impaired. In this paper we use weak estimates of local similarities and propose a single optimization problem to extract batches of samples with mutually consistent relations. Conflicting relations are distributed over different batches and similar samples are grouped into compact groups. Learning visual similarities is then framed as a sequence of categorization tasks. The CNN then consolidates transitivity relations within and between groups and learns a single representation for all samples without the need for labels. The proposed unsupervised approach has shown competitive performance on detailed posture analysis and object classification.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2017

Bautista, Miguel; Sanakoyeu, A.; Ommer, Björn

Deep Unsupervised Similarity Learning using Partially Ordered Sets Conference

The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

@conference{6200,

title = {Deep Unsupervised Similarity Learning using Partially Ordered Sets},

author = {Miguel Bautista and A. Sanakoyeu and Björn Ommer},

url = {https://github.com/asanakoy/deep_unsupervised_posets

https://arxiv.org/abs/1704.02268},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

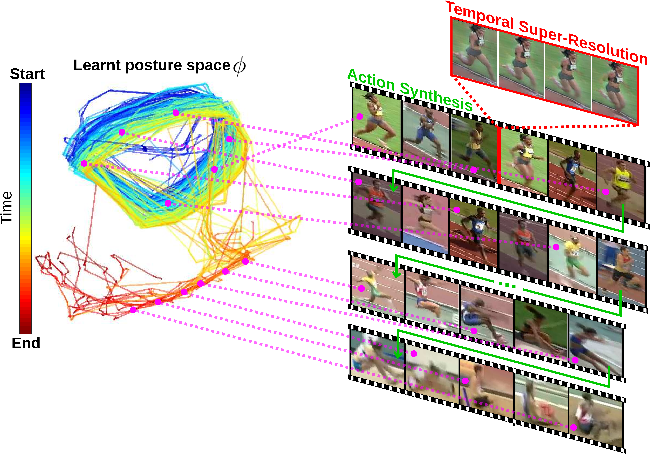

Milbich, Timo; Bautista, Miguel; Sutter, Ekaterina; Ommer, Björn

Unsupervised Video Understanding by Reconciliation of Posture Similarities Conference

Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017.

@conference{6187,

title = {Unsupervised Video Understanding by Reconciliation of Posture Similarities},

author = {Timo Milbich and Miguel Bautista and Ekaterina Sutter and Björn Ommer},

url = {https://hci.iwr.uni-heidelberg.de/compvis/research/tmilbich_iccv17

https://arxiv.org/abs/1708.01191},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

booktitle = {Proceedings of the IEEE International Conference on Computer Vision (ICCV)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

2016

Bautista, Miguel; Sanakoyeu, A.; Sutter, E.; Ommer, Björn

CliqueCNN: Deep Unsupervised Exemplar Learning Conference

Proceedings of the Conference on Advances in Neural Information Processing Systems (NIPS), MIT Press, Barcelona, 2016.

@conference{arXiv:1608.08792,

title = {CliqueCNN: Deep Unsupervised Exemplar Learning},

author = {Miguel Bautista and A. Sanakoyeu and E. Sutter and Björn Ommer},

url = {https://github.com/asanakoy/cliquecnn

https://arxiv.org/abs/1608.08792},

year = {2016},

date = {2016-01-01},

urldate = {2016-01-01},

booktitle = {Proceedings of the Conference on Advances in Neural Information Processing Systems (NIPS)},

publisher = {MIT Press},

address = {Barcelona},

abstract = {Exemplar learning is a powerful paradigm for discovering visual similarities in an unsupervised manner. In this context, however, the recent breakthrough in deep learning could not yet unfold its full potential. With only a single positive sample, a great imbalance between one positive and many negatives, and unreliable relationships between most samples, training of Convolutional Neural networks is impaired. Given weak estimates of local distance we propose a single optimization problem to extract batches of samples with mutually consistent relations. Conflicting relations are distributed over different batches and similar samples are grouped into compact cliques. Learning exemplar similarities is framed as a sequence of clique categorization tasks. The CNN then consolidates transitivity relations within and between cliques and learns a single representation for all samples without the need for labels. The proposed unsupervised approach has shown competitive performance on detailed posture analysis and object classification.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}
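The grouping step described in the abstract, turning weak local similarities into compact groups whose indices act as pseudo-labels for a categorization task, can be sketched as follows. The paper formulates this as a single optimization problem that also distributes conflicting relations over different batches; the greedy procedure below is a swapped-in simplification for illustration only, and the names `greedy_cliques` and `pseudo_labels` are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-12)


def greedy_cliques(features: List[Sequence[float]], clique_size: int) -> List[List[int]]:
    """Greedily group samples into compact groups of mutually similar samples.

    Hypothetical simplification: seed a group with the lowest-index unassigned sample,
    then repeatedly add the unassigned sample with the highest mean similarity to the group.
    """
    n = len(features)
    sim = [[cosine(features[i], features[j]) for j in range(n)] for i in range(n)]
    unassigned = set(range(n))
    cliques = []
    while unassigned:
        seed = min(unassigned)
        clique = [seed]
        unassigned.remove(seed)
        while len(clique) < clique_size and unassigned:
            # Sorting makes tie-breaking deterministic.
            best = max(sorted(unassigned),
                       key=lambda k: sum(sim[k][m] for m in clique) / len(clique))
            clique.append(best)
            unassigned.remove(best)
        cliques.append(clique)
    return cliques


def pseudo_labels(cliques: List[List[int]], n: int) -> List[int]:
    """Assign each sample the index of its clique, to be used as a classification target."""
    labels = [-1] * n
    for label, clique in enumerate(cliques):
        for idx in clique:
            labels[idx] = label
    return labels


# Toy usage: two pairs of similar feature vectors.
feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
groups = greedy_cliques(feats, clique_size=2)
print(groups, pseudo_labels(groups, len(feats)))
```

A CNN trained to classify samples into these pseudo-classes would then learn a shared representation, after which the similarities could be re-estimated and the grouping repeated.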
