The large intra-class variability of articulated objects, e.g., animal bodies, poses a significant challenge for representation learning. Furthermore, the analysis of posture and behavior is of fundamental importance for numerous applications, especially in biomedicine. Our goal is a model-free approach that learns detailed representations of human and animal posture and temporal kinematics without requiring a preconceived body model or tedious manual annotation of keypoints. Because the approach is keypoint-free and instead learns the articulation and degrees of freedom actually present in the data, it avoids introducing model or annotation biases. Our ultimate application goal is thus an unsupervised, non-invasive, unbiased diagnostic analysis based on behavior analytics.

This site provides a (selective) overview of our research on behavior analysis and its various applications. For a comprehensive list, please visit our publication page.

2022

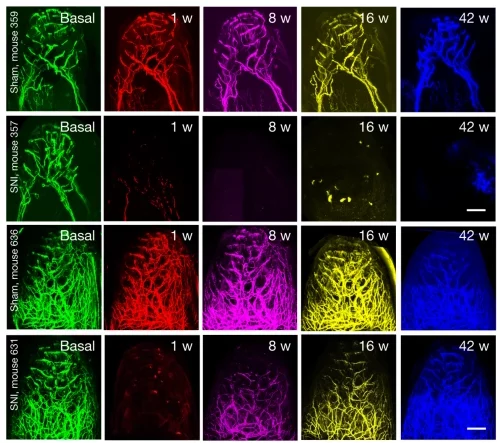

Gangadharan, Vijayan; Zheng, Hongwei; Taberner, Francisco J.; Landry, Jonathan; Nees, Timo A.; Pistolic, Jelena; Agarwal, Nitin; Männich, Deepitha; Benes, Vladimir; Helmstaedter, Moritz; Ommer, Björn; Lechner, Stefan G.; Kuner, Thomas; Kuner, Rohini

Neuropathic pain caused by miswiring and abnormal end organ targeting Journal Article

In: Nature, vol. 606, pp. 137–145, 2022.

@article{nokey,

title = {Neuropathic pain caused by miswiring and abnormal end organ targeting},

author = {Vijayan Gangadharan and Hongwei Zheng and Francisco J. Taberner and Jonathan Landry and Timo A. Nees and Jelena Pistolic and Nitin Agarwal and Deepitha Männich and Vladimir Benes and Moritz Helmstaedter and Björn Ommer and Stefan G. Lechner and Thomas Kuner and Rohini Kuner},

url = {https://www.nature.com/articles/s41586-022-04777-z},

year = {2022},

date = {2022-01-01},

urldate = {2022-05-25},

journal = {Nature},

volume = {606},

pages = {137--145},

abstract = {Nerve injury leads to chronic pain and exaggerated sensitivity to gentle touch (allodynia) as well as a loss of sensation in the areas in which injured and non-injured nerves come together. The mechanisms that disambiguate these mixed and paradoxical symptoms are unknown. Here we longitudinally and non-invasively imaged genetically labelled populations of fibres that sense noxious stimuli (nociceptors) and gentle touch (low-threshold afferents) peripherally in the skin for longer than 10 months after nerve injury, while simultaneously tracking pain-related behaviour in the same mice. Fully denervated areas of skin initially lost sensation, gradually recovered normal sensitivity and developed marked allodynia and aversion to gentle touch several months after injury. This reinnervation-induced neuropathic pain involved nociceptors that sprouted into denervated territories precisely reproducing the initial pattern of innervation, were guided by blood vessels and showed irregular terminal connectivity in the skin and lowered activation thresholds mimicking low-threshold afferents. By contrast, low-threshold afferents—which normally mediate touch sensation as well as allodynia in intact nerve territories after injury—did not reinnervate, leading to an aberrant innervation of tactile end organs such as Meissner corpuscles with nociceptors alone. Genetic ablation of nociceptors fully abrogated reinnervation allodynia. Our results thus reveal the emergence of a form of chronic neuropathic pain that is driven by structural plasticity, abnormal terminal connectivity and malfunction of nociceptors during reinnervation, and provide a mechanistic framework for the paradoxical sensory manifestations that are observed clinically and can impose a heavy burden on patients.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2021

Sardari, Faegheh; Ommer, Björn; Mirmehdi, Majid

Unsupervised View-Invariant Human Posture Representation Conference

British Machine Vision Conference (BMVC), 2021.

@conference{nokey,

title = {Unsupervised View-Invariant Human Posture Representation},

author = {Faegheh Sardari and Björn Ommer and Majid Mirmehdi},

url = {https://arxiv.org/abs/2109.08730},

year = {2021},

date = {2021-10-18},

urldate = {2021-10-18},

booktitle = {British Machine Vision Conference (BMVC)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

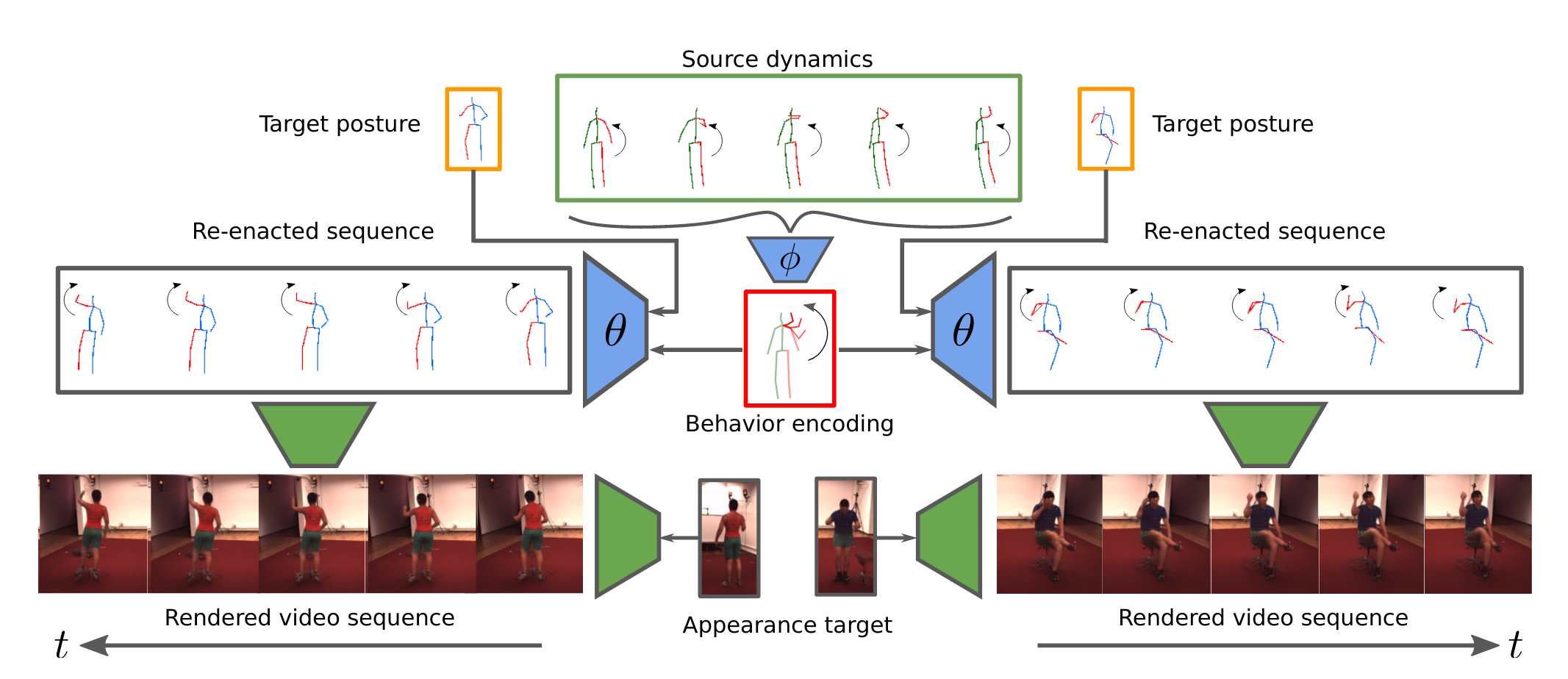

Blattmann, Andreas; Milbich, Timo; Dorkenwald, Michael; Ommer, Björn

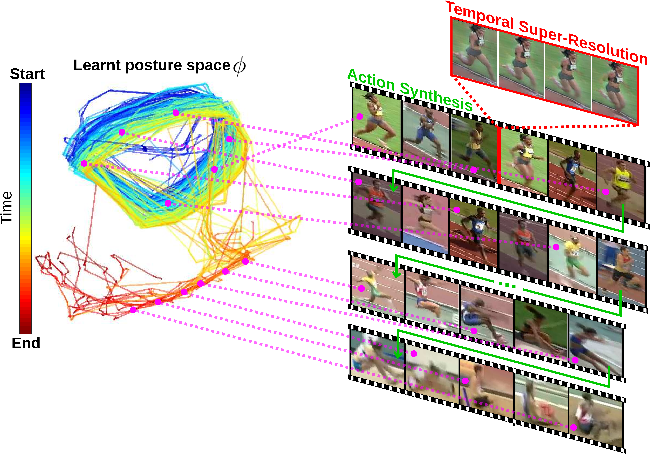

Behavior-Driven Synthesis of Human Dynamics Conference

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

@conference{7044,

title = {Behavior-Driven Synthesis of Human Dynamics},

author = {Andreas Blattmann and Timo Milbich and Michael Dorkenwald and Björn Ommer},

url = {https://compvis.github.io/behavior-driven-video-synthesis/

https://arxiv.org/abs/2103.04677},

year = {2021},

date = {2021-01-01},

urldate = {2021-01-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

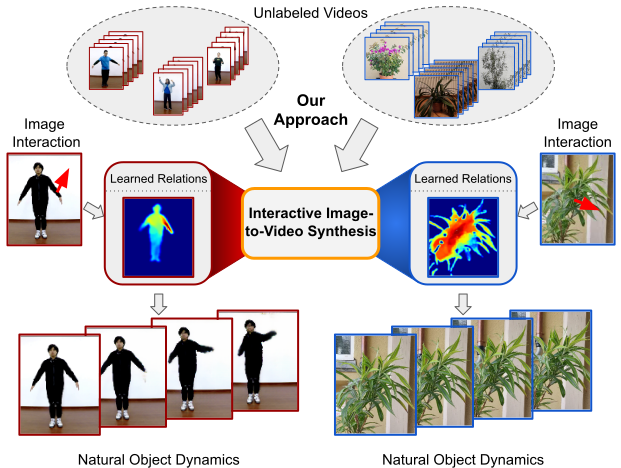

Blattmann, Andreas; Milbich, Timo; Dorkenwald, Michael; Ommer, Björn

Understanding Object Dynamics for Interactive Image-to-Video Synthesis Conference

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

@conference{7063,

title = {Understanding Object Dynamics for Interactive Image-to-Video Synthesis},

author = {Andreas Blattmann and Timo Milbich and Michael Dorkenwald and Björn Ommer},

url = {https://compvis.github.io/interactive-image2video-synthesis/

https://arxiv.org/abs/2106.11303v1},

year = {2021},

date = {2021-01-01},

urldate = {2021-01-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

abstract = {What would be the effect of locally poking a static scene? We present an approach that learns naturally-looking global articulations caused by a local manipulation at a pixel level. Training requires only videos of moving objects but no information of the underlying manipulation of the physical scene. Our generative model learns to infer natural object dynamics as a response to user interaction and learns about the interrelations between different object body regions. Given a static image of an object and a local poking of a pixel, the approach then predicts how the object would deform over time. In contrast to existing work on video prediction, we do not synthesize arbitrary realistic videos but enable local interactive control of the deformation. Our model is not restricted to particular object categories and can transfer dynamics onto novel unseen object instances. Extensive experiments on diverse objects demonstrate the effectiveness of our approach compared to common video prediction frameworks.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

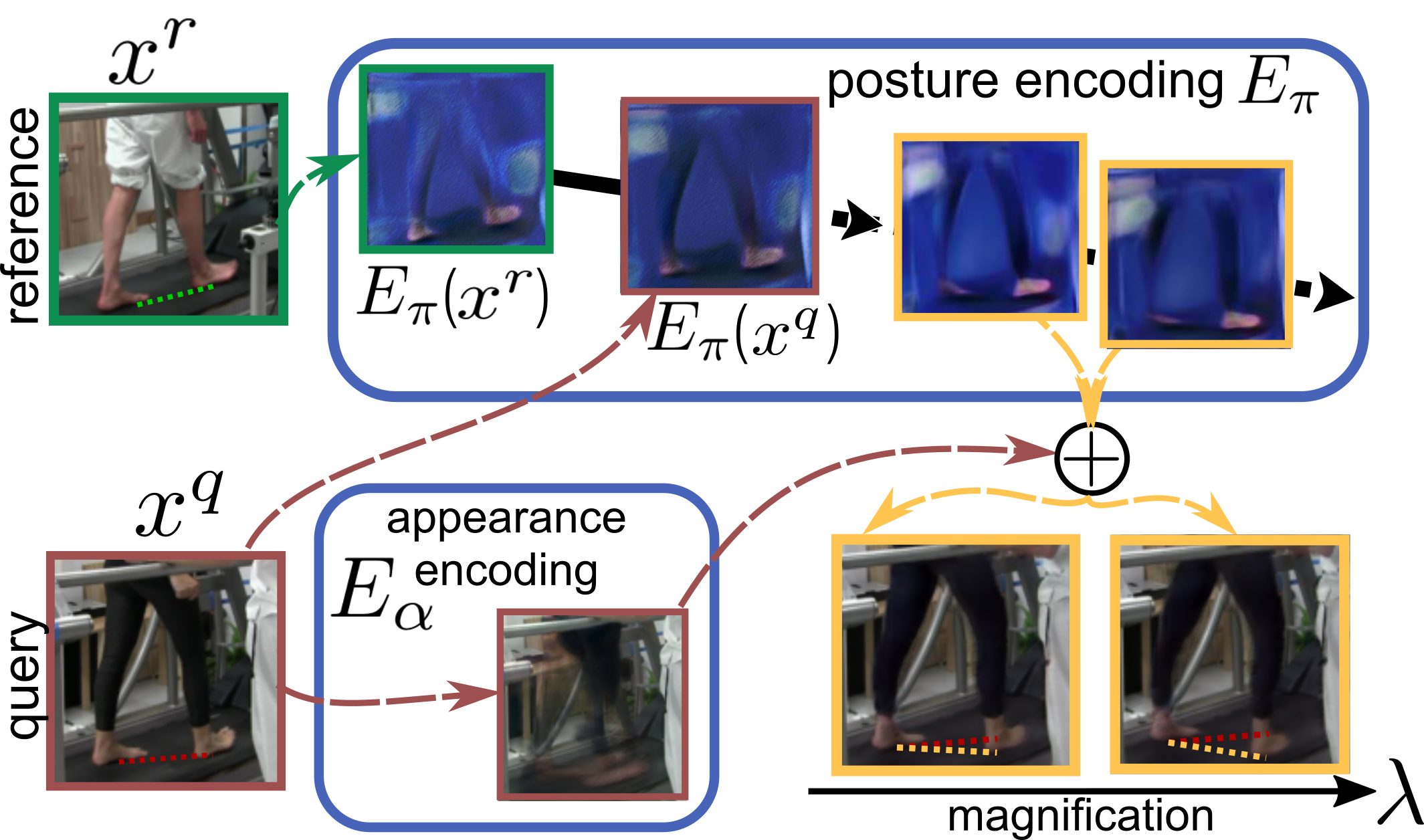

Brattoli, Biagio; Büchler, Uta; Dorkenwald, Michael; Reiser, Philipp; Filli, Linard; Helmchen, Fritjof; Wahl, Anna-Sophia; Ommer, Björn

Unsupervised behaviour analysis and magnification (uBAM) using deep learning Journal Article

In: Nature Machine Intelligence, 2021.

@article{7045,

title = {Unsupervised behaviour analysis and magnification (uBAM) using deep learning},

author = {Biagio Brattoli and Uta Büchler and Michael Dorkenwald and Philipp Reiser and Linard Filli and Fritjof Helmchen and Anna-Sophia Wahl and Björn Ommer},

url = {https://utabuechler.github.io/behaviourAnalysis/

https://rdcu.be/ch6pL},

doi = {10.1038/s42256-021-00326-x},

year = {2021},

date = {2021-01-01},

urldate = {2021-01-01},

journal = {Nature Machine Intelligence},

abstract = {Motor behaviour analysis is essential to biomedical research and clinical diagnostics as it provides a non-invasive strategy for identifying motor impairment and its change caused by interventions. State-of-the-art instrumented movement analysis is time- and cost-intensive, because it requires the placement of physical or virtual markers. As well as the effort required for marking the keypoints or annotations necessary for training or fine-tuning a detector, users need to know the interesting behaviour beforehand to provide meaningful keypoints. Here, we introduce unsupervised behaviour analysis and magnification (uBAM), an automatic deep learning algorithm for analysing behaviour by discovering and magnifying deviations. A central aspect is unsupervised learning of posture and behaviour representations to enable an objective comparison of movement. Besides discovering and quantifying deviations in behaviour, we also propose a generative model for visually magnifying subtle behaviour differences directly in a video without requiring a detour via keypoints or annotations. Essential for this magnification of deviations, even across different individuals, is a disentangling of appearance and behaviour. Evaluations on rodents and human patients with neurological diseases demonstrate the wide applicability of our approach. Moreover, combining optogenetic stimulation with our unsupervised behaviour analysis shows its suitability as a non-invasive diagnostic tool correlating function to brain plasticity.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2020

Dorkenwald, Michael; Büchler, Uta; Ommer, Björn

Unsupervised Magnification of Posture Deviations Across Subjects Conference

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

@conference{7042,

title = {Unsupervised Magnification of Posture Deviations Across Subjects},

author = {Michael Dorkenwald and Uta Büchler and Björn Ommer},

url = {https://compvis.github.io/magnify-posture-deviations/

https://openaccess.thecvf.com/content_CVPR_2020/papers/Dorkenwald_Unsupervised_Magnification_of_Posture_Deviations_Across_Subjects_CVPR_2020_paper.pdf},

year = {2020},

date = {2020-01-01},

urldate = {2020-01-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

2017

Milbich, Timo; Bautista, Miguel; Sutter, Ekaterina; Ommer, Björn

Unsupervised Video Understanding by Reconciliation of Posture Similarities Conference

Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017.

@conference{6187,

title = {Unsupervised Video Understanding by Reconciliation of Posture Similarities},

author = {Timo Milbich and Miguel Bautista and Ekaterina Sutter and Björn Ommer},

url = {https://hci.iwr.uni-heidelberg.de/compvis/research/tmilbich_iccv17

https://arxiv.org/abs/1708.01191},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

booktitle = {Proceedings of the IEEE International Conference on Computer Vision (ICCV)},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

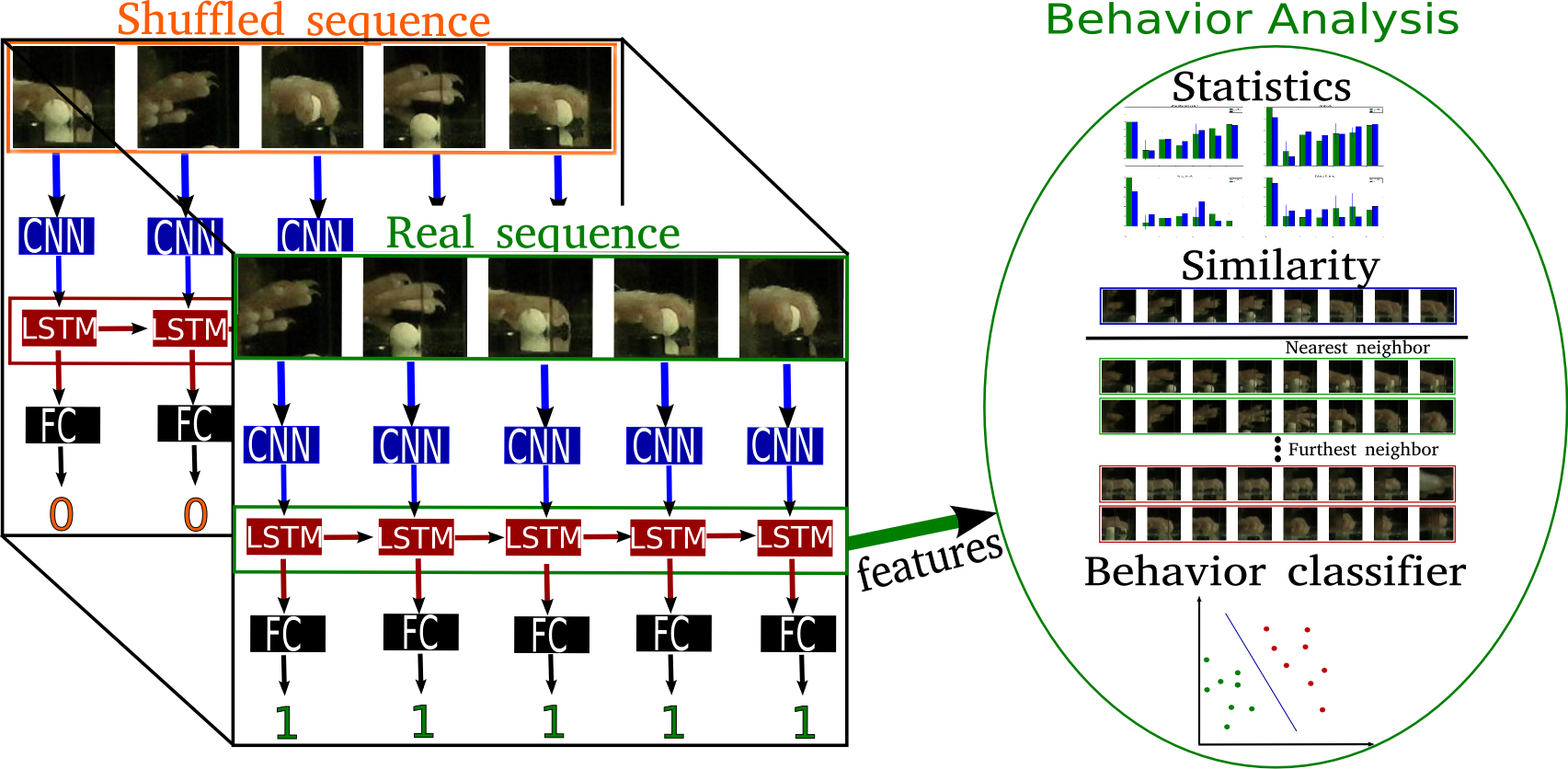

Brattoli, Biagio; Büchler, Uta; Wahl, Anna-Sophia; Schwab, M. E.; Ommer, Björn

LSTM Self-Supervision for Detailed Behavior Analysis Conference

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (BB and UB contributed equally), 2017.

@conference{buechler:CVPR:2017,

title = {LSTM Self-Supervision for Detailed Behavior Analysis},

author = {Biagio Brattoli and Uta Büchler and Anna-Sophia Wahl and M. E. Schwab and Björn Ommer},

url = {https://ommer-lab.com/wp-content/uploads/2021/10/LSTM-Self-Supervision-for-Detailed-Behavior-Analysis.pdf},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

note = {BB and UB contributed equally},

abstract = {Behavior analysis provides a crucial non-invasive and easily accessible diagnostic tool for biomedical research. A detailed analysis of posture changes during skilled motor tasks can reveal distinct functional deficits and their restoration during recovery. Our specific scenario is based on a neuroscientific study of rodents recovering from a large sensorimotor cortex stroke and skilled forelimb grasping is being recorded. Given large amounts of unlabeled videos that are recorded during such long-term studies, we seek an approach that captures fine-grained details of posture and its change during rehabilitation without costly manual supervision. Therefore, we utilize self-supervision to automatically learn accurate posture and behavior representations for analyzing motor function. Learning our model depends on the following fundamental elements: (i) limb detection based on a fully convolutional network is initialized solely using motion information, (ii) a novel self-supervised training of LSTMs using only temporal permutation yields a detailed representation of behavior, and (iii) back-propagation of this sequence representation also improves the description of individual postures. We establish a novel test dataset with expert annotations for evaluation of fine-grained behavior analysis. Moreover, we demonstrate the generality of our approach by successfully applying it to self-supervised learning of human posture on two standard benchmark datasets.},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

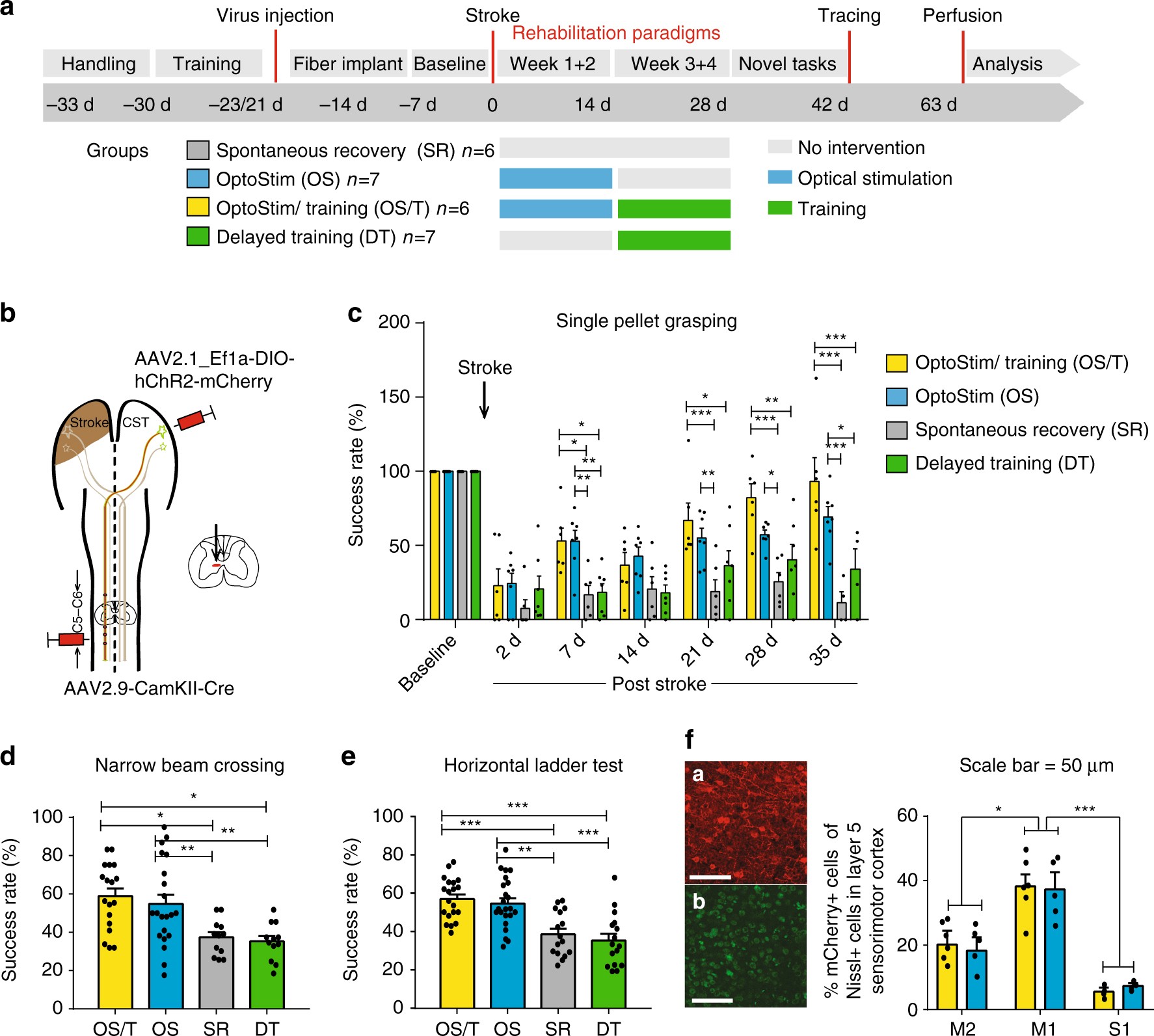

Wahl, Anna-Sophia; Büchler, Uta; Brändli, A.; Brattoli, Biagio; Musall, S.; Kasper, H.; Ineichen, B. V.; Helmchen, F.; Ommer, Björn; Schwab, M. E.

Optogenetically stimulating the intact corticospinal tract post-stroke restores motor control through regionalized functional circuit formation Journal Article

In: Nature Communications, 2017.

@article{nokey,

title = {Optogenetically stimulating the intact corticospinal tract post-stroke restores motor control through regionalized functional circuit formation},

author = {Anna-Sophia Wahl and Uta Büchler and A. Brändli and Biagio Brattoli and S. Musall and H. Kasper and B.V. Ineichen and F. Helmchen and Björn Ommer and M. E. Schwab},

url = {https://www.nature.com/articles/s41467-017-01090-6},

doi = {10.1038/s41467-017-01090-6},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

journal = {Nature Communications},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2015

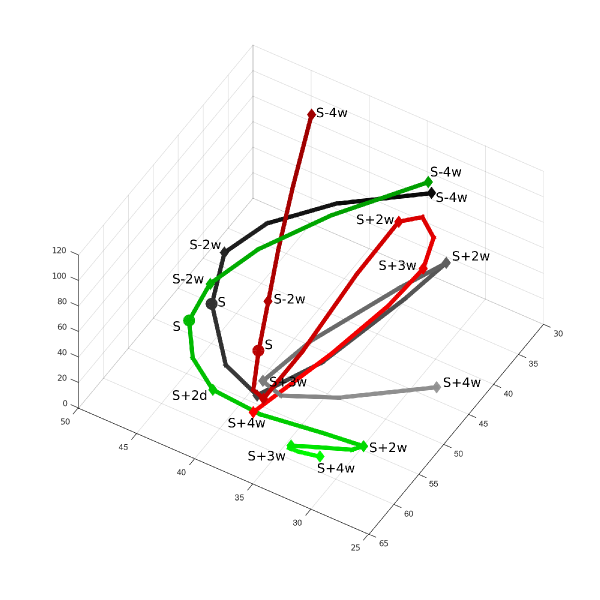

Antic, B.; Büchler, Uta; Wahl, Anna-Sophia; Schwab, M. E.; Ommer, Björn

Spatiotemporal Parsing of Motor Kinematics for Assessing Stroke Recovery Conference

Medical Image Computing and Computer-Assisted Intervention, Springer, 2015.

@conference{antic:MICCAI:2015,

title = {Spatiotemporal Parsing of Motor Kinematics for Assessing Stroke Recovery},

author = {B. Antic and Uta Büchler and Anna-Sophia Wahl and M. E. Schwab and Björn Ommer},

url = {https://ommer-lab.com/wp-content/uploads/2021/10/Spatiotemporal-Parsing-of-Motor-Kinemtaics-for-Assessing-Stroke-Recovery.pdf},

doi = {10.1007/978-3-319-24574-4_56},

year = {2015},

date = {2015-01-01},

urldate = {2015-01-01},

booktitle = {Medical Image Computing and Computer-Assisted Intervention},

publisher = {Springer},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}

2014

Wahl, Anna-Sophia; Omlor, W.; Rubio, J. C.; Chen, J. L.; Zheng, H.; Schröter, A.; Gullo, M.; Weinmann, O.; Kobayashi, K.; Helmchen, F.; Ommer, Björn; Schwab, M. E.

Asynchronous Therapy Restores Motor Control by Rewiring of the Rat Corticospinal Tract after Stroke Journal Article

In: Science, vol. 344, no. 6189, pp. 1250–1255, 2014.

@article{Wahl:Science:2014,

title = {Asynchronous Therapy Restores Motor Control by Rewiring of the Rat Corticospinal Tract after Stroke},

author = {Anna-Sophia Wahl and W. Omlor and J. C. Rubio and J. L. Chen and H. Zheng and A. Schröter and M. Gullo and O. Weinmann and K. Kobayashi and F. Helmchen and Björn Ommer and M. E. Schwab},

url = {https://www.science.org/doi/abs/10.1126/science.1253050},

doi = {10.1126/science.1253050},

year = {2014},

date = {2014-01-01},

urldate = {2014-01-01},

journal = {Science},

volume = {344},

number = {6189},

pages = {1250--1255},

publisher = {American Association for the Advancement of Science},

keywords = {},

pubstate = {published},

tppubtype = {article}

}